5.8 Determining an Appropriate vCPU-to-pCPU Ratio

In a virtual machine, processors are referred to as virtual CPUs or vCPUs. When the vSphere administrator adds vCPUs to a virtual machine, each of those vCPUs is assigned to a physical CPU (pCPU), although the actual pCPU might not always be the same. There must always be enough pCPUs available to support the number of vCPUs assigned to a single virtual machine or the virtual machine will not boot.

However, one of the major advantages of vSphere virtualization is the ability to oversubscribe, so the number of vCPUs assigned to virtual machines does not have to match the number of physical CPUs in the host 1:1. For vSphere 6.0, there is a maximum of 32 vCPUs per physical core, and vSphere administrators can allocate up to 4,096 vCPUs to virtual machines on a single host, although the actual achievable number of vCPUs per core depends on the workload and specifics of the hardware. For more information, see the latest version of the VMware Performance Best Practices for VMware vSphere 5.5 at http://www.vmware.com/pdf/Perf_Best_Practices_vSphere5.5.pdf.

Whenever the vCPU-to-pCPU ratio exceeds 1:1, the vSphere hypervisor must invoke processor scheduling to distribute processor time to the virtual machines that need it. For example, if the vSphere administrator has created a 5:1 vCPU-to-pCPU ratio, each physical processor is supporting five vCPUs. The higher the ratio, the greater the performance impact, because each virtual machine has to wait longer for physical processors to become available. The most useful metric when looking at CPU oversubscription, and when determining how long virtual machines have to wait for processor time, is CPU Ready.

The vCPU-to-pCPU ratio to aim for in your design depends on the application you are virtualizing. In the absence of empirical data, which is generally the case on a heterogeneous cloud platform, it is a good practice to use templates and blueprints to encourage your service consumers to start with a single vCPU and scale out when necessary. While multiple vCPUs are great for workloads that support parallelization, they are counterproductive for applications that are not built to be multithreaded. A virtual machine with 4 vCPUs requires the hypervisor to wait for 4 pCPUs to become available, and on a particularly busy ESXi host with other virtual machines, this could take significantly longer than if the virtual machine had only a single vCPU. This performance impact is compounded because the ESXi scheduler prefers to reuse the same vCPU-to-pCPU mapping to boost performance through CPU caching on the socket.

Service providers must, where possible, educate their consumers on provisioning virtual machines with the correct resources, rather than indiscriminately following the physical server specifications that software vendors often refer to in their documentation. If consumers have no explicit requirements, advise them to start with a single vCPU if possible, and then scale up once they have the metric information on which to base an informed decision.

To optimize the vCPU-to-pCPU ratio and take full advantage of the benefits of overprovisioning, you would ideally first engage in dialog with the consumers and application owners to understand the application’s workload before allocating virtual machine resources. However, in the world of shared-platform, multitenant cloud computing, where this is unlikely to be possible and the application workload will be unknown, it is critical not to overprovision virtual CPUs, and to scale out only when it becomes necessary. Employing VMware vRealize Operations™ as a monitoring platform that can trend historical performance data and identify virtual machines with complex or mixed workloads is highly beneficial, and its capacity planning functionality assists in determining when to add pCPUs. CPU Ready Time is the key metric to consider, alongside CPU utilization. Correlating these with memory and network statistics, as well as SAN I/O and disk I/O metrics, enables the service provider to proactively avoid bottlenecks and correctly size the VMware Cloud Provider Program platform, avoiding both performance penalties and overprovisioning.

The actual achievable ratio in a specific environment depends on a number of factors:

• The vSphere version – The vSphere CPU scheduler is always being improved. The newer the version of vSphere, the more consolidation is possible.

• Processor age – Newer processors are much more robust than older ones. With newer processors, service providers should be able to achieve higher vCPU:pCPU ratios.

• Workload type – Different kinds of workloads on the host platform will result in different ratios.

• As a guideline, the following vCPU:pCPU ratios can be considered a good starting point for a design:

o 1:1 to 3:1 is not typically an issue

o With 3:1 to 5:1, you might begin to see performance degradation

o 6:1 or greater is often going to cause a significant problem for VM performance
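The guideline bands above can be sketched as a small calculation. This is a minimal illustration only: the `Host` record and both function names are hypothetical and are not part of any vSphere API, and because the guideline does not say how to treat ratios between 5:1 and 6:1, the sketch assumes they fall into the middle band.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Host:
    """Hypothetical inventory record; not a vSphere API object."""
    physical_cores: int        # pCPUs available on the host
    vcpus_per_vm: List[int]    # vCPU count of each virtual machine


def vcpu_to_pcpu_ratio(host: Host) -> float:
    """Return total allocated vCPUs divided by physical cores."""
    if host.physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(host.vcpus_per_vm) / host.physical_cores


def classify_ratio(ratio: float) -> str:
    """Map a ratio onto the guideline bands listed above."""
    if ratio <= 3:
        return "not typically an issue"
    # Assumption: the guideline leaves 5:1 to 6:1 unspecified, so this
    # sketch folds that gap into the middle band.
    if ratio < 6:
        return "possible performance degradation"
    return "likely significant performance problem"


# Example: 48 vCPUs allocated across five VMs on a 16-core host.
host = Host(physical_cores=16, vcpus_per_vm=[4, 4, 8, 16, 16])
ratio = vcpu_to_pcpu_ratio(host)
print(f"{ratio:.1f}:1 -> {classify_ratio(ratio)}")
```

A result in the lower band is only a starting point for a design; the achievable ratio still depends on the vSphere version, processor age, and workload type described above.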

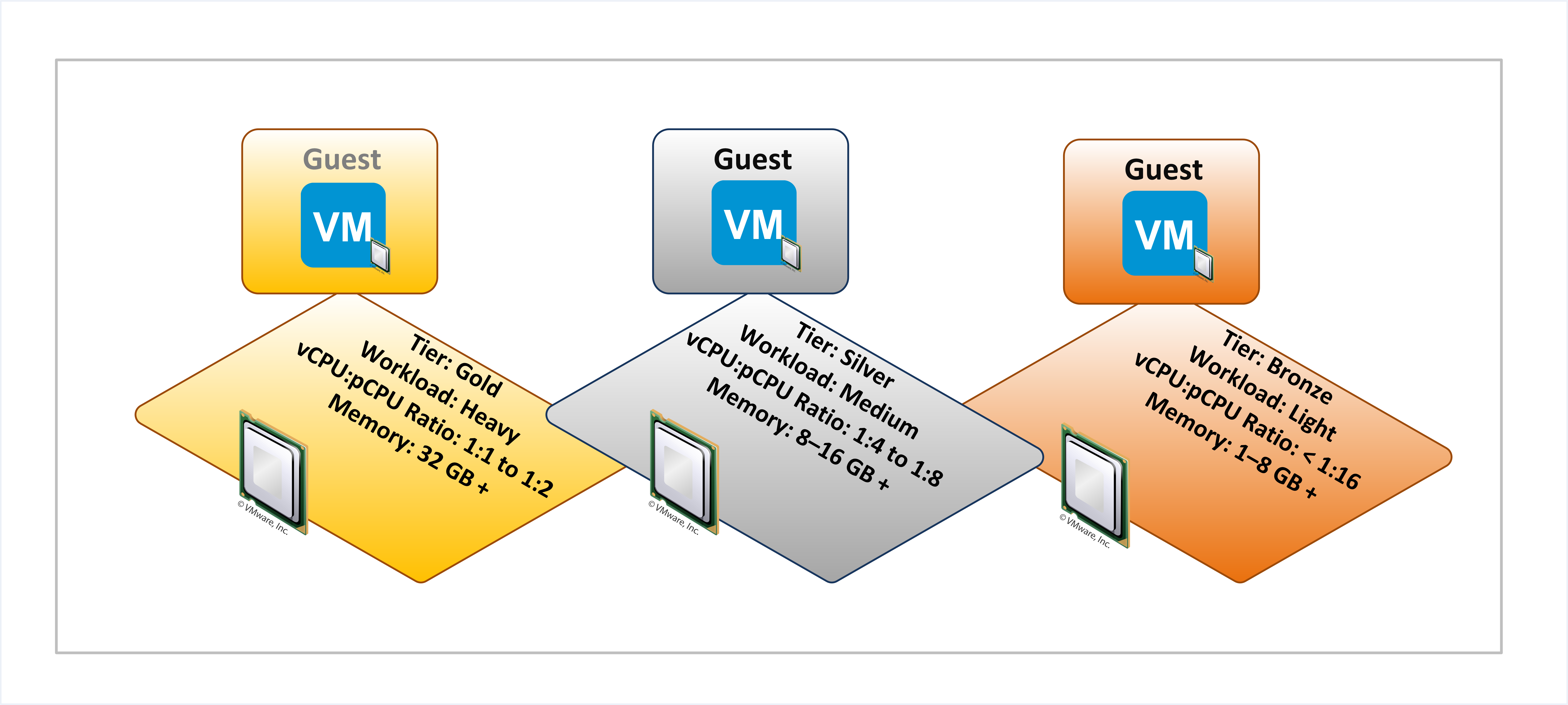

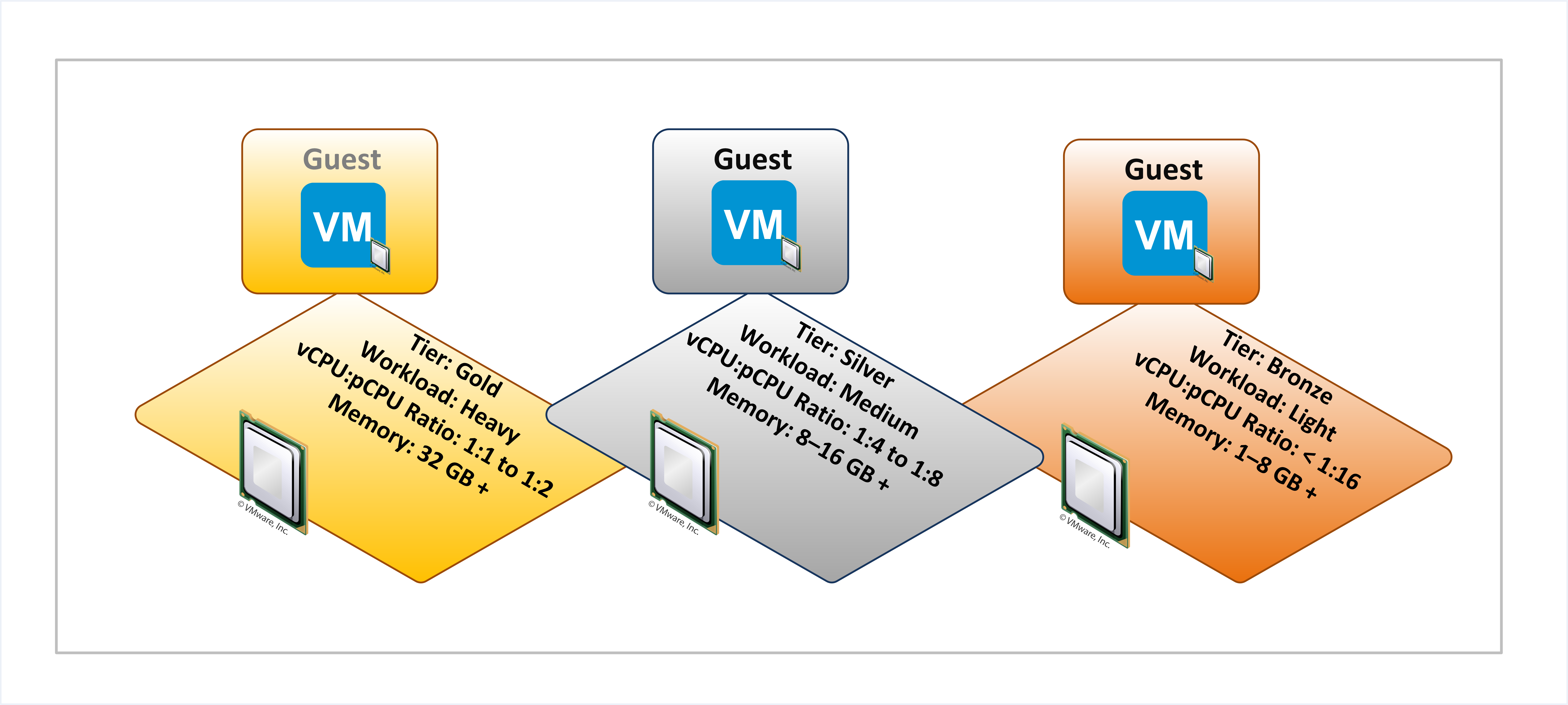

The use of vCPU-to-pCPU ratios can also form part of a tiered service offering, for instance, by offering lower CPU consolidation ratios on higher tiers of service, or the reverse. Use Figure 14 as a starting point to calculate consolidation ratios in a VMware Cloud Provider Program design, but remember that every rule has exceptions, and that calculating the specific requirements of your tenants is key to a successful architecture. This figure is for initial guidance only.

Figure 14. Virtual CPU-to-Physical CPU Ratio

As a general guideline, attempt to keep the CPU Ready metric at 5 percent or below. For most types of platforms, this is considered a good practice.
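Performance charts commonly report CPU Ready as a summation in milliseconds rather than as a percentage, so checking the 5 percent guideline usually involves a conversion. The following is a minimal sketch of that conversion, assuming a 20-second real-time sampling interval and assuming that a virtual machine-level value should be averaged across its vCPUs. The function names and the threshold constant are illustrative, not part of any VMware tool.

```python
READY_GUIDELINE_PERCENT = 5.0  # guideline from the text above


def cpu_ready_percent(ready_ms: float, interval_s: float = 20.0,
                      vcpus: int = 1) -> float:
    """Convert a CPU Ready summation (ms) to a percentage of the interval.

    Assumptions: interval_s is the chart sampling interval (20 s for
    real-time statistics), and ready_ms is summed across the VM's vCPUs,
    so dividing by vcpus yields an average per-vCPU figure.
    """
    if interval_s <= 0 or vcpus <= 0:
        raise ValueError("interval_s and vcpus must be positive")
    return 100.0 * ready_ms / (interval_s * 1000.0 * vcpus)


def within_ready_guideline(percent: float) -> bool:
    """True when CPU Ready is at or below the 5 percent guideline."""
    return percent <= READY_GUIDELINE_PERCENT


# Example: a 2-vCPU VM reporting 1,600 ms of ready time in one sample.
pct = cpu_ready_percent(ready_ms=1600, vcpus=2)
print(f"CPU Ready: {pct:.1f}% (within guideline: {within_ready_guideline(pct)})")
```

Historical charts use longer sampling intervals than real-time charts, so pass the interval that matches the data source through `interval_s` rather than relying on the default.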