Background

It has been demonstrated how vCenter Site Recovery Manager, in conjunction with some complementary custom automation, can be used to offer a vCloud DR solution that enables recovery of a vCloud Director solution at a recovery site.

In cases where stretched Layer 2 networks are present, the recovery of vApps is greatly simplified because vApps can remain connected to the same logically defined virtual networks, regardless of the physical location in which the vApps are running.

The existing vCloud disaster recovery process, while theoretically capable of supporting designs that do not include stretched Layer 2 networks, does not lend itself well to this configuration. The primary issue is the requirement to update the network configuration of all vApps. The complexity associated with the reconfiguration of vApp networking is influenced by a number of factors including:

– Type of networks to which a vApp is connected (organization virtual datacenter, organization external, or vApp).

– Routing configuration of the networks to which the vApp is connected (NAT routed or direct).

– Firewall and/or NAT configuration defined on vCloud Networking and Security Edge devices (NAT routed).

– Quantity of networks to which the vApp is connected.

When connected to an organization virtual datacenter network, there is little or no impact. The vApp can retain its initial configuration, as there are no dependencies upon the physical network. This is not the case for organization external networks.

In the case of vApps connected to an organization external network that is directly connected, the current vCloud disaster recovery process involves disconnection of vApps from the network for the production site and connection to an equivalent network for the recovery site. For this to work, site-specific network configuration parameters such as netmask, gateway, and DNS must be defined. Following reconfiguration, external references to the vApps also need updating. This situation is further complicated when an organization external network has a routed connection. The complication arises from the multiple IP address changes taking place:

1. The vApp is allocated a new IP address from the new organization virtual datacenter network.

2. The associated external network has a different IP address.

The introduction of vApp networks can further complicate the process.

VXLAN makes it possible to simplify the disaster recovery and multi-location implementation of vCloud Director. This is achieved by creating a Layer 2 overlay network without changing the Layer 3 interconnects that are already in place.
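To illustrate why the overlay leaves the existing Layer 3 interconnects untouched, the following minimal sketch builds the 8-byte VXLAN header described in RFC 7348 and wraps an unmodified Layer 2 frame in it. The function names are hypothetical and chosen for illustration only. The outer UDP/IP headers, which the physical network routes as ordinary Layer 3 traffic, are assumed to be added by the host networking stack and are not shown.

```python
import struct
from typing import Tuple

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag from RFC 7348: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(vni: int, inner_ethernet_frame: bytes) -> bytes:
    """Return the UDP payload that carries the original Layer 2 frame."""
    return vxlan_header(vni) + inner_ethernet_frame


def decapsulate(udp_payload: bytes) -> Tuple[int, bytes]:
    """Recover the VNI and the untouched inner frame from a UDP payload."""
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8, udp_payload[8:]
```

Because the inner frame is carried byte for byte, a virtual machine's MAC and IP addresses are the same whichever site's hosts perform the encapsulation.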

This section describes a test scenario in which a vCloud Director implementation based on vCenter Site Recovery Manager fails over without the need to reassign IP addresses to the virtual machines, and describes the scripted changes that must be made to simplify the process.

VXLAN for DR Architecture

To conduct the required testing, a sample architecture was deployed to simulate the process. In keeping with the reference infrastructure and methodology defined in the vCloud DR Solution Tech Guide, the test infrastructure consists of a cluster that has ESXi members in both the primary and the recovery site. The premise is that the workloads run in the primary site, where the vSphere hosts are connected. At the recovery site, the vSphere hosts are in maintenance mode, but configured in the same cluster and attached to all of the same vSphere Distributed Switches.

The solution approach considered in the following sections is developed based on Overview of Disaster Recovery in vCloud Director (http://communities.vmware.com/docs/DOC-18861). All prerequisites for this solution continue to apply.

Logical Infrastructure

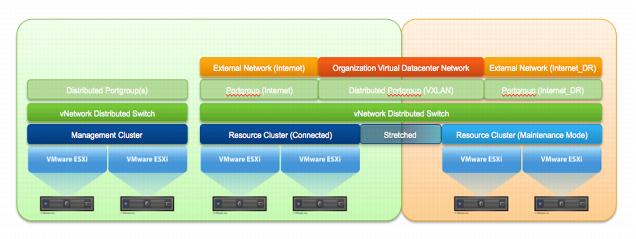

To address the complexities of recovering a vApp from the production site to the recovery site in the absence of stretched Layer 2 networking, a mechanism is required to abstract the underlying IP address changes from the recovered vApps. The following diagram provides a logical overview of the deployment infrastructure.

Figure 71. Logical View of Infrastructure

In the resource cluster, all vSphere hosts are connected to a common vSphere Distributed Switch with site-specific port groups defined for the Internet and Internet_DR networks. In vCloud Director, the Internet and Internet_DR port groups are defined as external networks. An organization virtual datacenter network is defined in conjunction with this, and a port group from the VXLAN network pool is deployed.

The vSphere hosts deployed in the production site are connected to a common Layer 3 management network. Similarly, the vSphere hosts deployed in the recovery site are connected to a common Layer 3 management network, albeit a different Layer 3 network from the one used at the production site. The Internet external networks are the primary networks used for vApp connectivity, and the network at the production site is likewise in a different Layer 3 network than the one available in the recovery site. These are attached to vCloud Director as two distinct external networks.

vCloud Networking and Security Edge firewall rules, NAT translations, load balancer configurations, and VPN configurations must be duplicated to cover the disparate production and failover address spaces. There are two options for keeping the configurations in sync:

Option 1: Maintain the configuration for both sites at the same time.

Advantages:

– Simplifies failover as configurations are already in place.

Disadvantages:

– Requires the organization administrator to be diligent in maintaining the configurations.

– Difficult to troubleshoot if there is a configuration mismatch.

– Primary interface needs to be removed if hosts on the original Layer 2 primary network need to be reachable.

Option 2: Use the API upon failover to duplicate the primary site configuration to the failover site.

Advantages:

– No maintenance after the initial failover address space metadata has been populated.

– Address mapping can be done and allocated in advance.

Disadvantages:

– Must have failover address metadata specified to work.

– Address pool size needs to match to simplify mapping.
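The address mapping that Option 2 relies on can be sketched as follows. This is only an illustration under stated assumptions: the rule dictionaries, their field names (`external_ip`, `internal_ip`), and both function names are hypothetical and do not represent the vCloud API. The sketch maps each external address to the host with the same offset in the failover network, which is why equally sized address pools simplify the mapping. The subnets in the usage example are documentation ranges, not values from the test environment.

```python
import ipaddress


def map_to_failover(address: str, primary_net: str, failover_net: str) -> str:
    """Map an address to the host with the same offset in the failover network."""
    primary = ipaddress.ip_network(primary_net)
    failover = ipaddress.ip_network(failover_net)
    if primary.num_addresses != failover.num_addresses:
        raise ValueError("primary and failover pools must be the same size")
    ip = ipaddress.ip_address(address)
    if ip not in primary:
        raise ValueError(f"{address} is not in {primary_net}")
    offset = int(ip) - int(primary.network_address)
    return str(failover.network_address + offset)


def translate_nat_rules(rules, primary_net, failover_net):
    """Rewrite only the external side of each (hypothetical) rule dictionary.

    Internal addresses are left unchanged, on the assumption that the vApps
    sit on a VXLAN-backed network and keep their addressing.
    """
    return [
        {**rule, "external_ip": map_to_failover(rule["external_ip"], primary_net, failover_net)}
        for rule in rules
    ]
```

For example, `map_to_failover("192.0.2.10", "192.0.2.0/24", "198.51.100.0/24")` returns `"198.51.100.10"`. If the two pools differ in size, the function raises an error rather than guessing a mapping.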

Leveraging VXLAN can greatly simplify the deployment of vCloud Director DR solutions in the absence of stretched Layer 2 networking. Furthermore, this type of networking topology is complementary to the solution defined in the vCloud DR Solution Tech Guide and can be implemented with relatively few additions to the existing vCloud DR recovery process.

Following the successful recovery of a vCloud Director management cluster, some additional steps need to be included in the recovery of resource clusters to facilitate the recovery of edge gateway appliances and vApps. See the VXLAN Example in Implementation Examples.

VXLAN for DR Design Implications

Recovery hosts must be in maintenance mode so that virtual machines do not end up running in the recovery site and generating traffic between the recovery site and the primary site. The reason for this is that the vCloud Networking and Security Edge device is available in only one site at a time. Having the hosts in maintenance mode also keeps them in sync with all the changes that happen in the primary site.

If an organization has organization virtual datacenter networks that are directly attached, the process is as outlined in the existing vCloud DR recovery process. All of the vApps on that network need to be re-IPed to the correct addressing used in the recovery site Layer 3 network.

However, if the organization is using isolated networks that are VLAN-backed, they need to be recreated on the recovery site using the associated VLAN IDs available in the recovery site, and the vApps need to be reconnected to the new network. If they are port group-backed, the port groups still exist in the recovery site, but their definitions need to be revisited to verify that they are valid from a configuration point of view. Ease of recovery is afforded by using VXLAN-backed networks as outlined in this scenario, be they NAT routed or isolated.
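The design implications above amount to a per-network decision based on how each network is attached or backed. The following minimal sketch summarizes that decision as a lookup table. The enum, the action strings, and the function name are illustrative assumptions and are not part of any vCloud Director API.

```python
from enum import Enum


class Backing(Enum):
    DIRECT = "direct"          # directly attached organization network
    VLAN = "vlan"              # isolated, VLAN-backed
    PORT_GROUP = "port_group"  # isolated, port group-backed
    VXLAN = "vxlan"            # VXLAN-backed, NAT routed or isolated


# Hypothetical summary of the recovery action each case calls for.
RECOVERY_ACTIONS = {
    Backing.DIRECT: "re-IP vApps to the recovery site Layer 3 addressing",
    Backing.VLAN: "recreate network with recovery site VLAN IDs and reconnect vApps",
    Backing.PORT_GROUP: "verify existing port group definitions at the recovery site",
    Backing.VXLAN: "keep vApp addressing; no vApp network reconfiguration",
}


def recovery_plan(networks):
    """Return (network name, action) pairs for a mapping of name -> Backing."""
    return [(name, RECOVERY_ACTIONS[backing]) for name, backing in networks.items()]
```

Calling `recovery_plan({"web": Backing.VXLAN, "legacy": Backing.VLAN})` yields one action per network, which makes it easy to see which networks still need manual work during failover.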

References